Intercepting fast rolling balls with omnidirectional soccer robots

Introduction

This article is a bit different as the others. It does not focus on a single technology or a single aspect. I rather try to show how complex problems can be solved structurally, shown by an example of the AI-development of the robot soccer team TIGERs Mannheim (https://tigers-mannheim.de/).

Robot Soccer

RoboCup (aka the robot soccer world championship) was established in 1997. As of 2018, in the league we are playing at (the SSL league) are 8 vs. 8 robots playing against each other in a soccer game, similar to real soccer. The current field dimensions are 12m x 9m. The field size was roughly doubled compared to the previous years. The robots use omnidirectional wheels, they are very agile and use a central AI to control them. The ultimate goal of the RoboCup initiative is stated as follows:"By the middle of the 21st century, a team of fully autonomous humanoid robot soccer players shall win a soccer game, complying with the official rules of FIFA, against the winner of the most recent World Cup." A video and more information about the project can be found in my about-page

CAD model of the TIGERs Mannheim soccer robot (2019 version)

The Problem

In this article I want to focus on a very specific, but still very complex Problem of the robot soccer AI developed by the TIGERs Mannheim team. To give you an idea about the complexity and pace of the game take a look at the following video. The video shows two consecutive passes, followed by a goal shot from the yellow team. The blue robots try to disrupt the offensive yellow robots and to defend the goal.

The problem that I want to talk about is the "selection of the offensive robots". The software has to continuously determine the "offensive robots", this are the robots that are supposed to move towards the ball to interact with it. While this sounds like a very simple task, it actually is not.

**Note: If you are only interested in the final approach, go to the chapter: "The current way". **

Past Attempts

To find a good approach for this problem it is always helpful to consider past approaches and the advantages and disadvantages of those post attempts.

The first approach, distance to the ball:

This was a very simple approach and also the first one we implemented. Choosing the offensive robot by finding the robot with the min distance to the ball. This is very easy to implement and also very robust. There is no toggling or other strange effects. However, it will often not choose the robot that can reach the ball in the shortest time. Especially when the ball is moving or the robots have a high current velocity.

| (+) good | (-) bad |

|---|---|

| simple to implement | bad results when the ball is moving |

| robust | ignores actual time to get to the ball |

| ignores velocities | |

| ignores ball movement |

Improved approach, fastest robot to reach the ball:

The first improved version of the first approach was it to not consider distances to the ball, but to consider the times that the robots need to reach the ball position. However, this approach already requires a full physical movement model of the robots to calculate the time that a robot needs to reach a certain position (x,y,w) from another position (x,y,w). To calculate the robot trajectories the current state of the robot is taken into account, which also includes the current velocity and acceleration of the robot, as well as all of its movement constraints. While this approach has obviously some advantages it also comes with some drawbacks. Since the algorithm strongly relies on the velocity information of the robot, this approach is a lot more unstable than the previous one. Velocities are difficult to measure and thus very noisy. This leads to toggling between the robot selections and thus requires high thresholds to avoid rapid toggling between two robots with similar "ball reaching times"

| (+) good | (-) bad |

|---|---|

| still simple to implement | bad results when the ball is moving |

| more likely to find "the best robot" | ignores ball movement |

| unstable |

Now... which approach is actually good?

This question is not easy to answer and was actually one of the biggest problems when trying to improve the algorithm. It was never clear whether the algorithm actually yielded better results after a change. There are so many different situations, it is almost impossible to manually check all of the situations after an code update. There has to be some metric to determine the "suitability" of a new implementation. That is when I started implementing the testing framework...

Automated Testing

As mentioned before, we have to figure out a way to determine if changes made to the algorithm are "good" or "bad". Therefore, a series of integration tests have been created. In fact, an entire testing framework.

The framework makes it possible to create and then export a "situation" from the GUI to a json file. The unit-test framework then takes this json file to recreate the stored situation. Then the entire simulation of the AI-framework starts and checks if a specified outcome in the given situation occurs. Relevant parameters are. The time to catch the ball, the time to reach the travel line of the ball. The stability of the target position and if the robot was actually able to catch the ball. By the time around, 15 test situations have been created, which test a lot of the different situations that can occur in a real game. Thus, automatically testing many of the important cases of the algorithm under test.

The current way

Now, with a good testing framework and metric to measure the "suitability" of an algorithm we can actually figure out what approach works good and which approach is not suitable. Therefore, we come to the final and currently used approach. To make it simple, the current approach samples many points along the ball travel line. For each point a robot-trajectory is calculated to determine the time in which the robot could reach this given position. Then we calculate the time that the ball needs to reach this point by using our internal ball model. Then, we can calculate the "slack-time" that a given point has. A negative slack time means that the robot reaches the position before the ball does.

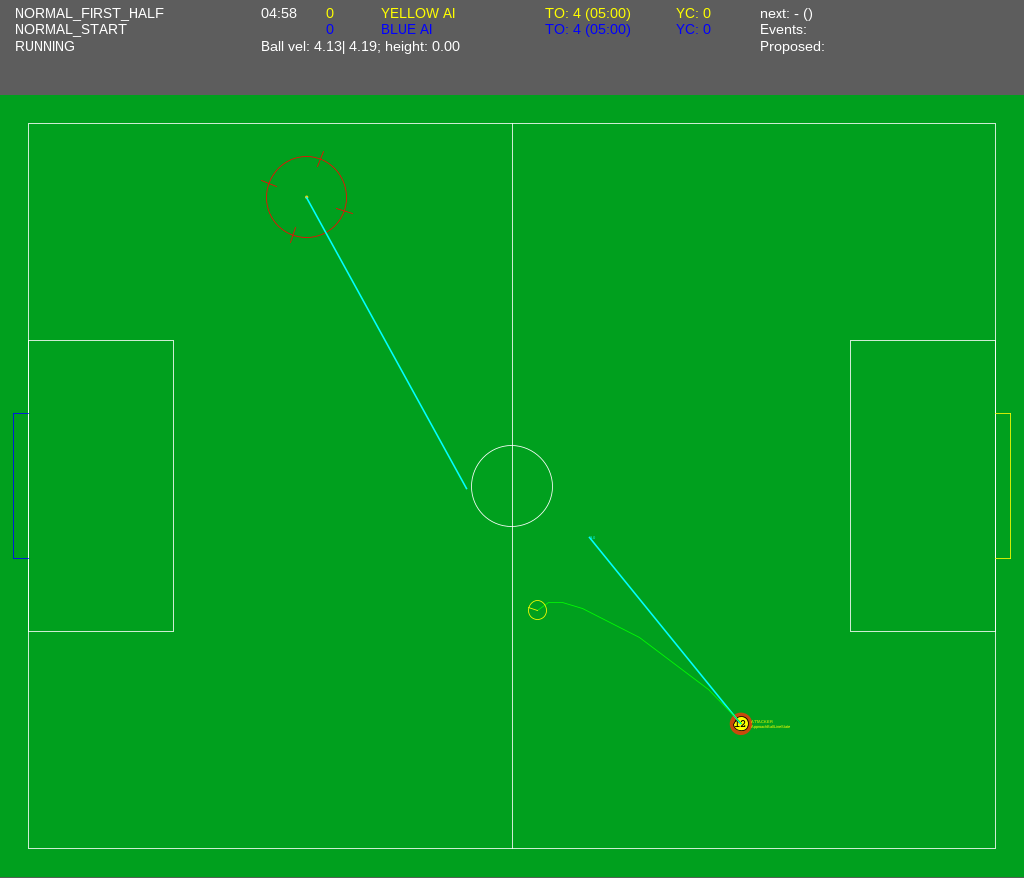

Lets look at the following situation:

Blue lines show the current velocity of the ball (~4m/s) and the robot (~2.5m/s). Green circle shows the movement target of the robot.

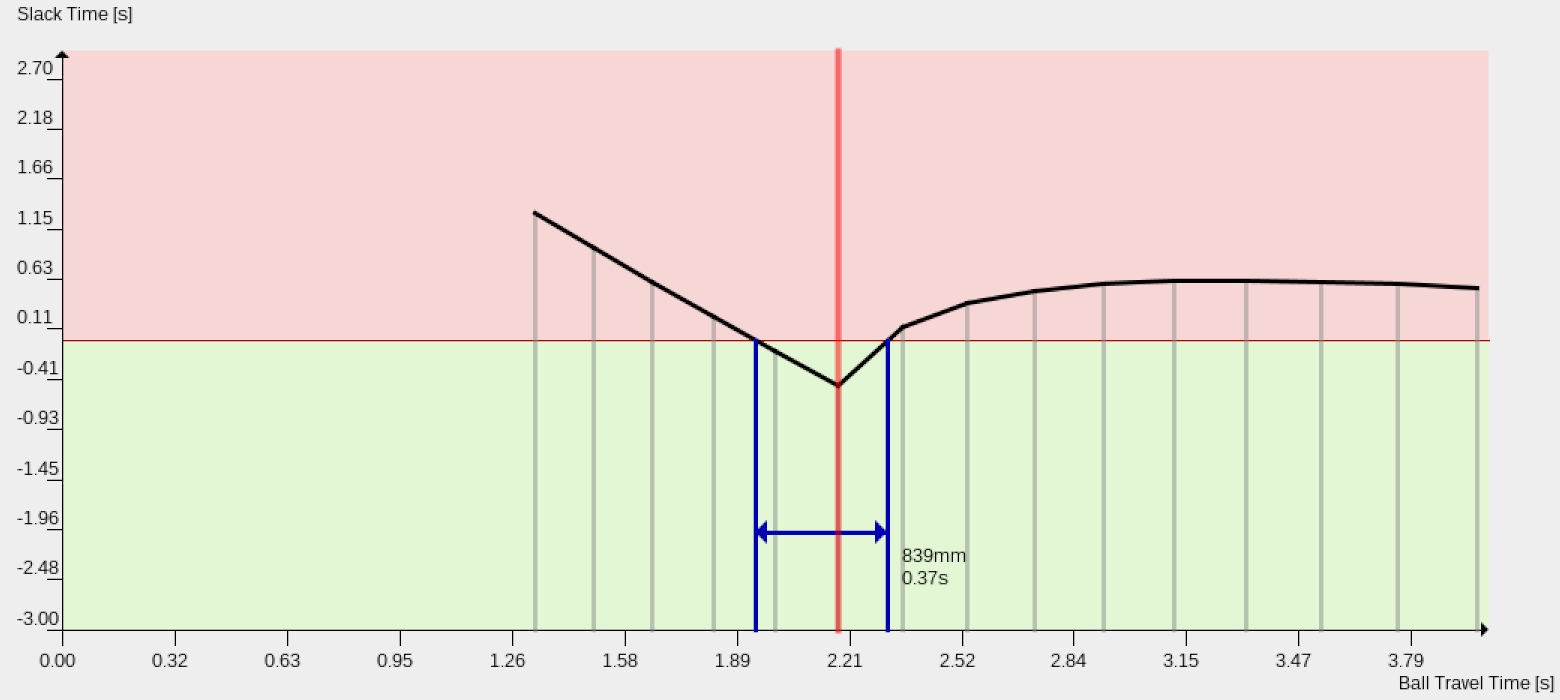

Plotting the slack times of the situation above results in this graph:

Calculating the best interception position.

The graph gives us some very interesting insights. In most situations in which a ball is rolling towards the robot there are two time slots (interception corridors) where the robot can actually catch the ball. Usually one small time window to catch the ball close to the robots current position and one large time window far in future, when the ball is getting so slow so that the robot can actually overtake the ball.

From here on the algorithm is pretty simple. The robot will try to move towards the first reachable corridor (negative slack time) that meets some requirements (corridor width > 0.2s, slack-time < -0.2s). The selected corridor also gives us a ball travel time (x-axis), which we can use to feed the ball model to calculate a target position to actually catch the ball. This will be done for each robot on the field. The robot that can catch the ball the fastest will be selected as the primary offensive robot.